Best Practices to Reduce Docker Image Size

INTRODUCTION

In this article, we will review several techniques to reduce Docker image size without sacrificing developers’ and ops’ convenience.

Docker images are an essential component for building Docker containers. Although the two are closely related, they are not the same thing: a Docker image serves as the base of a container. Docker images are created by writing Dockerfiles, which are lists of instructions executed automatically to create a specific Docker image. When building a Docker image, make sure to keep it light. Avoiding large images speeds up the build and deployment of containers, so it is worth reducing image size to a minimum.

Here are some basic steps that will help you create smaller and more efficient Docker images.

1. USE A SMALLER BASE IMAGE

FROM ubuntu

The above instruction sets your image size to roughly 128MB at the outset (the exact figure varies by Ubuntu release). Consider using a smaller base image. Each apt-get install or yum install line you add to your Dockerfile increases the image size by the size of the packages it installs. You probably don’t need many of the libraries you are installing, so identify the ones you really need and install only those.

For example, switching to an Alpine base image reduces the starting size from about 128MB to about 5MB.
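
As a rough illustration, the base image swap is a one-line change. The alpine:3.20 tag and the python3 package below are assumptions chosen for the example, not part of the original setup. Note that Alpine uses the apk package manager and musl libc rather than apt-get and glibc, so package names and binary compatibility may differ.

```dockerfile
# Instead of: FROM ubuntu
# Pinning a tag (3.20 is an assumed example) keeps builds reproducible.
FROM alpine:3.20

# Install only what the application actually needs; python3 is a placeholder.
RUN apk add --no-cache python3
```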

2. DON’T INSTALL DEBUG TOOLS LIKE curl/vim/nano

Many developers install tools such as curl or vim in their Dockerfiles so they can debug inside the container later. These debugging tools further increase the image size.

Note: It is recommended to install these tools only in the development Dockerfile and to remove them once development is complete and the image is ready for deployment to staging or production environments.
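
One possible way to follow this advice is to keep the debug tools in a separate development Dockerfile layered on top of the production image. This is only a sketch: the file name Dockerfile.dev and the image tag myapp:prod are hypothetical, and it assumes the production image is Debian- or Ubuntu-based and runs as root.

```dockerfile
# Dockerfile.dev (hypothetical file name): development-only image.
# myapp:prod is an assumed tag for the lean production image.
FROM myapp:prod

# Debug tools live only in this image, so the production image stays small.
RUN apt-get update \
    && apt-get install -y curl vim
```

Under those assumptions, it could be built with docker build -f Dockerfile.dev -t myapp:dev . while staging and production continue to use myapp:prod.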

3. MINIMIZE LAYERS

Try to minimize the number of layers used to install packages in the Dockerfile. Each RUN instruction creates a new layer, so every extra step in the build process can increase the size of the image.

FROM debian
RUN apt-get install -y <packageA>
RUN apt-get install -y <packageB>

Install all the packages in a single RUN instruction to reduce the number of steps in the build process and the size of the image.

FROM debian
RUN apt-get install -y <packageA> <packageB>

Note: With this method, that layer and every layer after it must be rebuilt each time you add a new package to install.

4. USE --no-install-recommends ON apt-get install

Adding --no-install-recommends to apt-get install -y can dramatically reduce the image size by skipping packages that aren’t strict dependencies but are only recommended for installation alongside the requested packages.

Note: On Alpine-based images, apk add commands should have --no-cache added.
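
A minimal sketch of the flag in use is shown here. The python3 package is only a placeholder for whatever your application needs.

```dockerfile
FROM debian

# --no-install-recommends installs only hard dependencies of python3,
# skipping packages that are merely recommended alongside it.
RUN apt-get update \
    && apt-get install -y --no-install-recommends python3

# On an Alpine-based image the comparable instruction would be:
#   RUN apk add --no-cache python3
```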

5. ADD rm -rf /var/lib/apt/lists/* TO SAME LAYER AS apt-get installs

Add rm -rf /var/lib/apt/lists/* at the end of the apt-get install -y command to clean up after installing packages. Chain it with && in the same RUN instruction, because files deleted in a later layer still take up space in the earlier one. (For yum, use yum clean all.)

If you need to install wget or curl to download a package, combine everything in one RUN statement. At the end of that statement, run apt-get remove on curl or wget once you no longer need them.
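
The pattern described in this section might look like the sketch below. The download URL and the /opt target directory are hypothetical placeholders. apt-get purge --auto-remove is used here in place of a plain apt-get remove so that dependencies pulled in only for curl are removed as well; ca-certificates is installed explicitly because --no-install-recommends would otherwise skip it and the HTTPS download would fail.

```dockerfile
FROM debian

# Everything happens in one RUN instruction, so curl, the downloaded archive,
# and the apt package lists never persist in any layer of the image.
# The URL and the /opt target are hypothetical placeholders.
RUN apt-get update \
    && apt-get install -y --no-install-recommends ca-certificates curl \
    && curl -fsSL https://example.com/tool.tar.gz -o /tmp/tool.tar.gz \
    && tar -xzf /tmp/tool.tar.gz -C /opt \
    && rm /tmp/tool.tar.gz \
    && apt-get purge -y --auto-remove curl \
    && rm -rf /var/lib/apt/lists/*
```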

6. USE fromlatest.io

From Latest will lint your Dockerfile and suggest further steps you can take to reduce your image size.

7. MULTI-STAGE BUILDS IN DOCKER

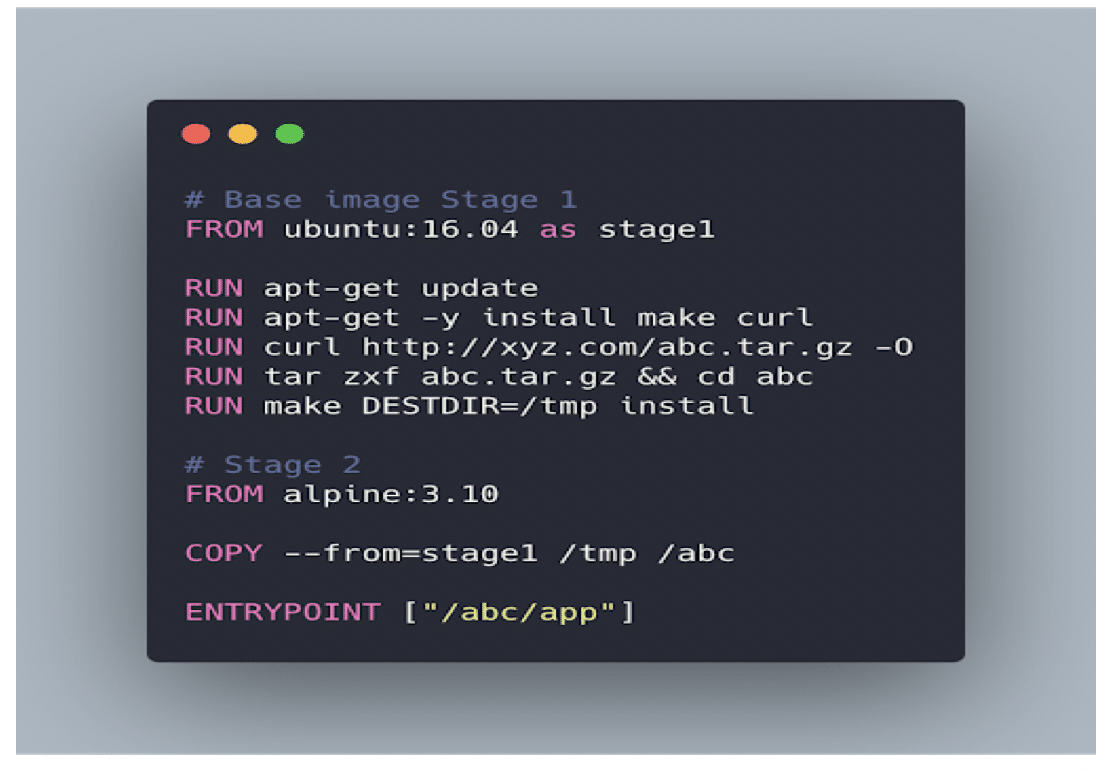

A multi-stage build divides a Dockerfile into multiple stages, passing the required artifact from one stage to the next and delivering the final artifact in the last stage. This way, the final image contains only the required artifact and none of the build-time content.
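
To make the idea concrete, here is a minimal two-stage sketch. It assumes a hypothetical Go application whose main package and go.mod sit at the root of the build context; the golang:1.22 and alpine:3.20 tags and the /out/app path are likewise assumptions for illustration.

```dockerfile
# Stage 1: build with the full Go toolchain (golang:1.22 is an assumed tag).
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
# CGO_ENABLED=0 produces a static binary that runs on a minimal base image.
RUN CGO_ENABLED=0 go build -o /out/app .

# Stage 2: the final image receives only the compiled artifact.
FROM alpine:3.20
COPY --from=build /out/app /usr/local/bin/app
ENTRYPOINT ["/usr/local/bin/app"]
```

The compiler, source code, and build cache stay in the first stage, which is discarded; only the layers of the last stage end up in the image you ship.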

CONCLUSION

The smaller the image, the better the resource utilization and the faster the operations. By carefully selecting and building image components according to the recommendations in this article, you can save space and build efficient, reliable Docker images.

About The Author

Nagesh A

SR Cloud Dev-Ops Engineer | Cloud Control

Senior Cloud DevOps Engineer with more than five years of experience in supporting, automating, and optimizing deployments to hybrid cloud platforms using DevOps processes, CI/CD, containers, and Kubernetes in both production and development environments.