Do You Have Time for Down Time?

When you are responsible for deploying enterprise applications, and you have users or customers (or internal employees who serve customers), you want the application or service you run to be available around the clock and to meet your SLAs. You might be able to schedule downtime during weekends or the like, but only some teams have the luxury of a maintenance window in which applications can be taken down for upgrades, patches and such.

However, there are many cases where the site cannot go down: a patient portal whose customers are primarily patients, a customer service portal for an automobile service, a bank, or really any establishment whose clients should always be able to access its site and services. In such cases you can’t afford any downtime. So how do we achieve that with the latest technologies available for hosting services and applications?

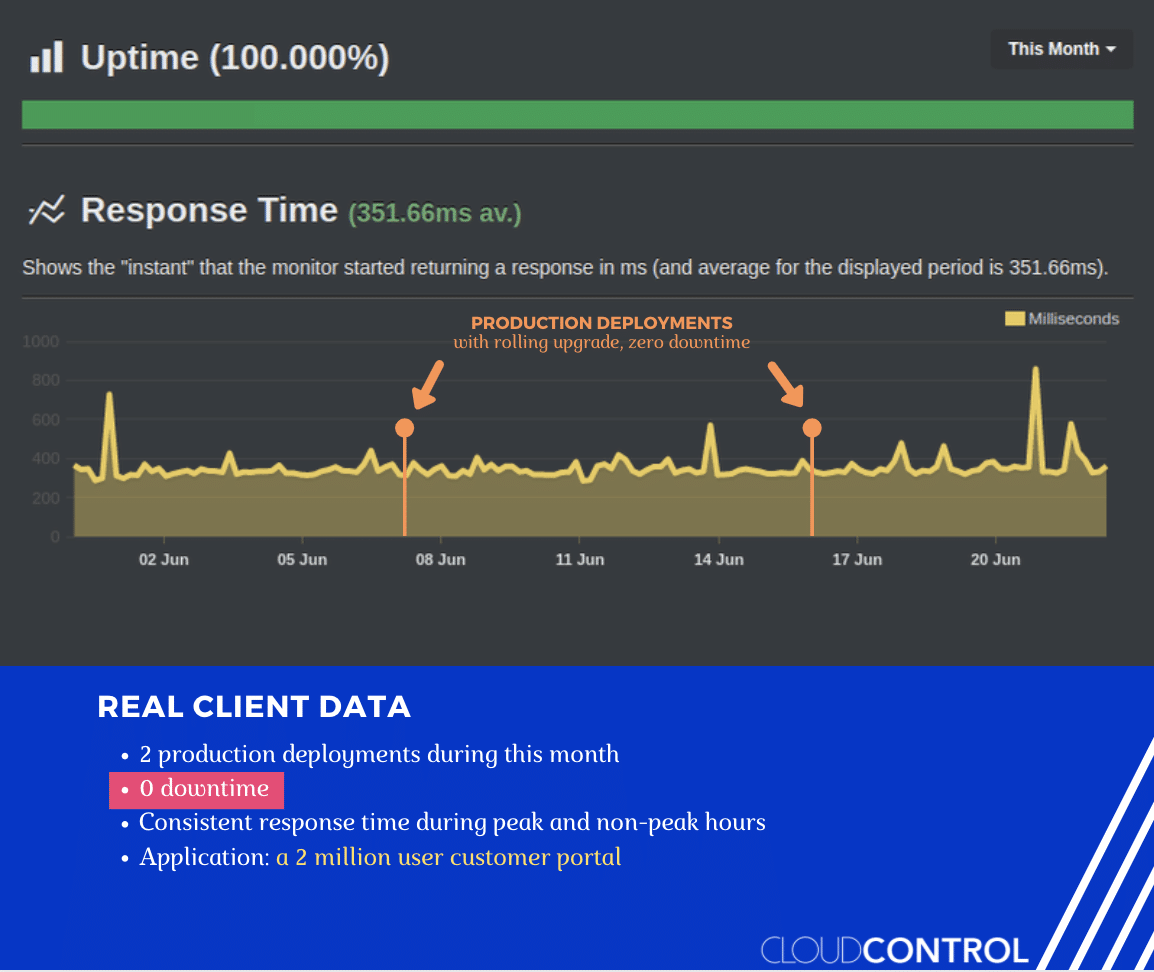

So here, I’m going to talk about how we achieved this for a client. In this case the client runs a customer portal with close to two million customers, who could be logging in at any time, and at times there could be up to 200,000 customers logging in and out of the site at once. Below you will find the actual real-time data that we collected from the site:

Here you can see that during the month of June, up until today, there has been no downtime: the site was 100% up. That period includes two production deployments, which happened around June 8 and again around June 15. So, while two production upgrades occurred, the site remained up.

In this particular chart, I’d like to highlight three things: two production upgrades during the month, zero downtime, and a consistent response time irrespective of how many people log into the system, all while the site is being used by 2 million customers.

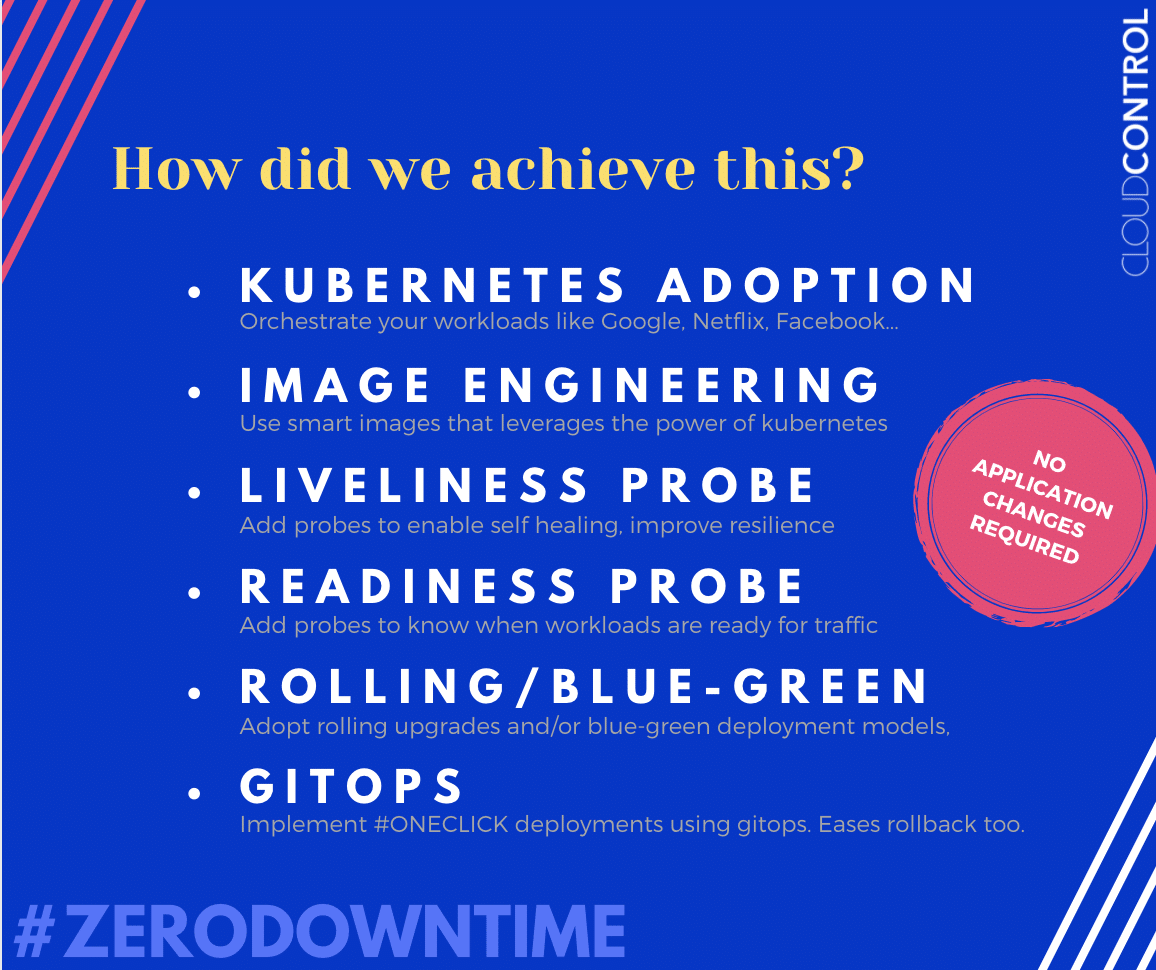

Now how did we achieve this?

- Kubernetes Adoption: We started with their existing application, which was hosted but had many issues with stability and with meeting its SLAs. We took that application without making any changes to it and were able to run it in Kubernetes. Kubernetes is built on the same technology that the Netflixes, Googles and Facebooks of the world all use: containers. Your applications are now bundled so that they run consistently, in a much more stable environment, across multiple infrastructures behind the scenes. Kubernetes adoption was one of the most important steps that we took for the client.

- Image Engineering: When we package the application into containers, we make sure that we are using an image that is smart enough to know how the application runs inside it. This doesn’t come with Kubernetes, and it doesn’t come with Docker or the other popular containerization technologies that most people use. Containers are created out of images, and these images can be made smart. Using our platform, we were able to do that. We engineered the image in such a way that it is aware of the application’s health. It becomes a kind of bridge between Kubernetes and the application, so the image knows how to run the application in the best possible way. At the same time, when the application is facing a problem, the image communicates with Kubernetes so that Kubernetes can stop sending traffic there. Instead of sending traffic to a particular instance of the application which might be in distress, Kubernetes points the traffic to another working instance. All this is done through image engineering. As part of image engineering we implement the health checks, starting with a liveness probe.

- Liveness Probe: We tell Kubernetes on a constant basis whether the application is still healthy enough to keep taking load, or traffic, when a customer visits the website. If the application or service can handle the traffic, Kubernetes keeps sending traffic to it. (In Kubernetes terms this is the liveness probe: a container that keeps failing it is restarted, while the readiness probe described next is what gates traffic.) So how does Kubernetes know? Image engineering: we put sufficient probes inside the image so that Kubernetes can easily determine whether the application is capable of taking traffic or not.

- Readiness Probe: The readiness probe is very important. Say the application had a problem and Kubernetes restarted it, or you are doing a rolling upgrade. In both cases Kubernetes needs to know when the application is actually up and running and able to take traffic. That is done through a readiness probe, and we engineered the image to provide that as well. During upgrades, all of this only pays off if you use rolling upgrades (the alternative is Blue-Green upgrades).

- Rolling/Blue-Green Upgrade: When you deploy a new version with a rolling upgrade, Kubernetes doesn’t kill all instances of a service or application in one shot. Instead it takes them down one at a time, upgrades each one, and uses the readiness probe (and then the liveness probe) to make sure the new instance is healthy. You can even apply a time factor to that and say, “make sure it is ready for 10 seconds” or “make sure it is ready for 3 minutes” before it receives traffic. Once you configure that along with the rolling upgrade, Kubernetes sends traffic to the application or service only when it is healthy enough to accept it. As mentioned earlier, Blue-Green is a different deployment model. Say you are running one cluster of the application at version 1.2 and you move to version 1.3 (it could be a bug fix, it could be an enhancement). With a Blue-Green deployment, the upgrade brings up a new cluster at a new URL that is not known to your customers. Engineers on your team can access this new URL, test the application completely, and then signal the load balancer that it is ready to switch to the new URL. That is Blue-Green, the other option.

- GitOps: All this is done using GitOps. Why use GitOps? GitOps is a process by which you can create a repeatable deployment model. That means any time you want to upgrade, patch or roll back, you make a Git commit of a YAML file or a source file, and that commit triggers the deployment. In that process, you can do several things: make sure that your images are properly created, scan for vulnerabilities, make sure that all the probes are in place, and run tests against the images. When all of that passes you can, if you want to, add approvals. Once all the checks and approvals are done, a GitOps platform can deploy it automatically. What our platform provides, then, is a GitOps-based deployment platform that gives you Kubernetes adoption, image engineering for 100% uptime (or close to it), liveness probes, readiness probes, and the option to do either rolling or Blue-Green deployments, all through a GitOps-based deployment process.
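
To make the probe and rolling-upgrade settings from the list above a little more concrete, here is a minimal sketch in Python that builds a Kubernetes Deployment manifest and prints it as JSON. It is not the client’s actual configuration: the application name `customer-portal`, the image reference, the `/healthz` and `/ready` paths, port 8080 and all of the timings are assumptions chosen for illustration. The manifest field names (`livenessProbe`, `readinessProbe`, `minReadySeconds`, `strategy.rollingUpdate`) are the standard `apps/v1` Deployment fields.

```python
import json


def http_probe(path: str, port: int, period_seconds: int, failure_threshold: int) -> dict:
    """Build an HTTP GET probe that Kubernetes calls every period_seconds."""
    return {
        "httpGet": {"path": path, "port": port},
        "periodSeconds": period_seconds,
        "failureThreshold": failure_threshold,
    }


def build_deployment(name: str, image: str, replicas: int = 3, port: int = 8080) -> dict:
    """Return an apps/v1 Deployment manifest as a plain dict.

    The probe paths and timings below are illustrative assumptions.
    """
    labels = {"app": name}
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        # Liveness: repeated failures make Kubernetes restart the container.
        "livenessProbe": http_probe("/healthz", port, period_seconds=10, failure_threshold=3),
        # Readiness: while this fails, the pod receives no Service traffic.
        "readinessProbe": http_probe("/ready", port, period_seconds=5, failure_threshold=2),
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The "time factor": a new pod must stay ready this many seconds
            # before the rollout counts it as available and moves on.
            "minReadySeconds": 10,
            "strategy": {
                "type": "RollingUpdate",
                # Add one new pod at a time; never go below the desired count.
                "rollingUpdate": {"maxUnavailable": 0, "maxSurge": 1},
            },
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical name and image reference, used only for this example.
    manifest = build_deployment("customer-portal", "registry.example.com/customer-portal:1.3")
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON manifests as well as YAML, the printed output could be committed to a Git repository and applied by a GitOps pipeline. Setting `maxUnavailable` to 0 is one reasonable choice when the goal is to avoid any dip in capacity during an upgrade; other values trade rollout speed against spare capacity.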

About The Author

Rejith Krishnan

CEO & Co-founder of CloudControl. Rejith has more than 28 years of experience as a senior FinTech executive specializing in cloud technology automation. Prior to founding CloudControl, Rejith spent 10 years at State Street Corporation as a Senior Architect where he was instrumental in the creation and deployment of State Street’s The Digital Enterprise (TDE). TDE also won multiple awards for an enterprise private cloud platform and Rejith was a coauthor of the patent State Street Corporation was awarded for this initiative.