Overview

Enterprises should adopt AI to stay competitive in today’s marketplace. AI lets companies process and analyze data quickly, identify trends, and make decisions more efficiently than traditional methods allow. It can also automate processes such as customer service, marketing campaigns, supply chain management, and logistics, which improves operational efficiency and supports better decision-making at scale. Enterprises that use AI well can gain a competitive edge by extracting insights from their data faster than before.

Use cases

Businesses can apply artificial intelligence (AI) across many areas. From automated customer service and fraud detection to predictive analytics and natural language processing (NLP), AI offers a range of solutions that improve operational efficiency. AI can also automate routine tasks, freeing up time for teams to focus on innovation and growth. Let’s explore some key applications of AI in business operations.

- Automated customer service: AI bots can provide personalized customer support, such as answering basic inquiries and providing product recommendations.

- Fraud detection: AI algorithms can flag anomalies in transaction and customer data that may indicate fraudulent activity.

- Predictive analytics: AI can analyze large amounts of data to make predictions about future trends and opportunities for the business.

- Natural language processing (NLP): AI algorithms can be used to understand natural language and extract meaningful insights from unstructured data such as customer reviews or emails.

- Process automation: AI can automate routine tasks such as invoicing, data entry, and document management.

- Image recognition: AI algorithms can identify objects in images or video footage for various applications, including security or inventory management systems.

- Intelligent virtual assistants: AI-powered virtual assistants can help employees manage tasks and give customers faster assistance and information about the company’s products and services.

- Supply chain optimization: AI algorithms can analyze supply chain data to find opportunities to cut costs and lead times while strengthening quality control across the supply chain.

- Predictive maintenance: Using historical maintenance records, sensor data, and machine learning models, companies can predict equipment malfunctions before they occur, reducing downtime and repair costs.

- Enhancing data security: AI technology can monitor networks for potential threats and alert enterprise security teams to suspicious activity, helping to protect their data.
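
Several of these use cases, fraud detection and network threat monitoring in particular, rest on anomaly detection. The sketch below shows the idea in its simplest form, assuming a per-customer history of legitimate transaction amounts: it learns the mean and standard deviation of past amounts and flags new amounts whose z-score exceeds a threshold. The sample data, the function names, and the threshold of 3.0 are hypothetical; real fraud systems typically use trained models over many features rather than a single statistic.

```python
import statistics
from typing import Sequence


def fit_baseline(history: Sequence[float]) -> tuple[float, float]:
    """Learn the mean and sample standard deviation of past (assumed legitimate) amounts."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        raise ValueError("history has no variation; z-scores are undefined")
    return mean, stdev


def flag_anomalies(
    amounts: Sequence[float],
    baseline: tuple[float, float],
    threshold: float = 3.0,  # illustrative cut-off, not a recommended value
) -> list[bool]:
    """Return True for each amount whose absolute z-score exceeds the threshold."""
    mean, stdev = baseline
    return [abs(x - mean) / stdev > threshold for x in amounts]


# Hypothetical transaction history for one customer.
history = [42.0, 38.5, 45.0, 40.0, 39.5, 41.0, 43.5, 37.0]
baseline = fit_baseline(history)

print(flag_anomalies([44.0, 950.0, 36.0], baseline))  # [False, True, False]
```

Fitting the baseline on history and scoring new amounts separately keeps a single large outlier from inflating the standard deviation it is measured against.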

The Challenge

Introducing AI into an enterprise can present challenges, especially when integrating novel technologies. These challenges span technical and organizational realms, requiring careful navigation and strategic decision-making.

From a technical perspective, integrating AI into existing IT infrastructure can be complex and requires specialized skills. Training machine learning models also demands large datasets, substantial compute resources, and significant time for data preprocessing.

On the organizational side, employees who fear being displaced by AI-powered systems may resist change. Executives, in turn, may lack the understanding and confidence needed to invest in implementing AI at scale. As with any technology initiative, careful planning and effective communication are vital to adopting AI successfully in an enterprise setting.

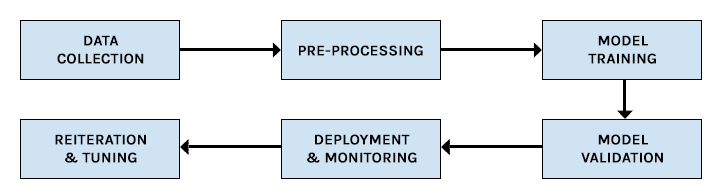

Components of an Enterprise AI System

- Data Collection – Collect data from internal and external sources.

- Data Preprocessing – Clean, organize, and prepare data for analysis.

- Model Training – Train machine learning models using supervised or unsupervised learning algorithms.

- Model Validation – Validate model accuracy and performance to ensure the model meets business requirements.

- Deployment & Monitoring – Deploy trained models into production and monitor their real-time performance.

- Iteration & Tuning – Retrain the model and tune its hyperparameters in response to new feedback or changes in the environment.
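
These components form a loop rather than a one-way pipeline. The sketch below walks through each stage using only the Python standard library, under loudly stated assumptions: synthetic data stands in for real internal and external sources, preprocessing simply drops incomplete records, a one-feature ordinary least squares fit stands in for model training, validation compares hold-out error against a business requirement, and a monitoring check flags the model for another iteration when live error drifts. The helper names, the 80/20 split, and the `max_error` threshold are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Optional

Record = tuple[float, Optional[float]]  # (feature, label or None if missing)
Sample = tuple[float, float]


@dataclass
class LinearModel:
    slope: float
    intercept: float

    def predict(self, x: float) -> float:
        return self.slope * x + self.intercept


def collect_data(n: int, seed: int = 0) -> list[Record]:
    """Data collection: synthetic stand-in for real sources (y is roughly 2x + 1)."""
    rng = random.Random(seed)
    records: list[Record] = []
    for i in range(n):
        x = float(i)
        # Every 10th record is missing its label, so preprocessing has work to do.
        y = None if i % 10 == 0 else 2.0 * x + 1.0 + rng.gauss(0.0, 0.5)
        records.append((x, y))
    return records


def preprocess(records: list[Record]) -> list[Sample]:
    """Data preprocessing: drop incomplete records."""
    return [(x, y) for x, y in records if y is not None]


def train(samples: list[Sample]) -> LinearModel:
    """Model training: ordinary least squares with a single feature."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    var = sum((x - mean_x) ** 2 for x, _ in samples)
    slope = cov / var
    return LinearModel(slope, mean_y - slope * mean_x)


def mean_absolute_error(model: LinearModel, samples: list[Sample]) -> float:
    return sum(abs(model.predict(x) - y) for x, y in samples) / len(samples)


def validate(model: LinearModel, holdout: list[Sample], max_error: float) -> bool:
    """Model validation: accept the model only if hold-out error meets the requirement."""
    return mean_absolute_error(model, holdout) <= max_error


def needs_retraining(model: LinearModel, live: list[Sample], max_error: float) -> bool:
    """Monitoring: flag the model for another iteration when live error drifts too high."""
    return mean_absolute_error(model, live) > max_error


clean = preprocess(collect_data(100))
split = int(len(clean) * 0.8)
train_set, holdout = clean[:split], clean[split:]
model = train(train_set)
print("validated:", validate(model, holdout, max_error=1.0))

# Simulated drift: the live relationship has changed to y = 3x + 1.
live = [(float(x), 3.0 * x + 1.0) for x in range(100, 110)]
print("retrain:", needs_retraining(model, live, max_error=1.0))
```

In a real system each stage would be a separate, scheduled job, and a `True` from the monitoring check would trigger the collection-to-validation steps again with fresh data.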

AI Tools

- TensorFlow: Provides libraries for data flow programming, machine learning, neural networks, and deep learning.

- PyTorch: An open-source deep learning framework with a wide range of modules for building AI models.

- Keras: A high-level API allowing users to build neural networks in Python quickly.

- Amazon SageMaker: An end-to-end platform combining pre-built ML algorithms, model-building tools, and deployment capabilities.

- Microsoft Azure Machine Learning Studio: Offers a drag-and-drop interface for building machine learning models without writing code.

- IBM Watson Machine Learning: A platform that enables developers to quickly build and deploy AI models in the cloud or on premises using Watson APIs and open-source tools such as TensorFlow and scikit-learn.

- H2O: Automates algorithm selection, hyperparameter tuning, and model deployment to enable faster AI model building and evaluation processes.

- Apache Spark MLlib: An open-source library for large-scale machine learning, with APIs for building, training, and deploying models in languages such as Java, Python, and Scala.

- BigML: A platform that automates everyday tasks in machine learning pipelines, such as data preparation and feature engineering, and provides access to pre-trained models from third parties through its web interface or API.

- scikit-learn: A popular open-source Python library that provides efficient algorithms for predictive modeling tasks such as classification, regression, and clustering.

AI Inference Stage

The inference stage of an AI system is where a trained model is applied to new data to draw conclusions or make decisions. The model uses the patterns it learned from historical data during training to make predictions, detect patterns, and identify trends in inputs it has not seen before. Depending on the task, the system may rely on neural networks, other machine learning algorithms, or natural language processing. Inference powers applications ranging from autonomous vehicles to medical diagnosis systems.
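
To make the split between training and inference concrete, here is a minimal sketch. Parameters learned earlier are loaded from a saved artifact (a JSON string stands in for a model file here), and a logistic regression model scores new inputs it has never seen. The feature names, weights, bias, and 0.5 decision threshold are all hypothetical values chosen for illustration.

```python
import json
import math

# Hypothetical artifact produced by an earlier training run.
MODEL_JSON = '{"weights": {"amount": 0.004, "foreign": 1.5}, "bias": -3.0}'


def load_model(raw: str) -> dict:
    """Load previously learned parameters; no training happens at inference time."""
    return json.loads(raw)


def predict_proba(model: dict, features: dict[str, float]) -> float:
    """Logistic regression inference: sigmoid of the bias plus weighted features."""
    z = model["bias"] + sum(
        weight * features.get(name, 0.0) for name, weight in model["weights"].items()
    )
    return 1.0 / (1.0 + math.exp(-z))


def predict(model: dict, features: dict[str, float], threshold: float = 0.5) -> bool:
    """Turn the probability into a yes/no decision."""
    return predict_proba(model, features) >= threshold


model = load_model(MODEL_JSON)
print(predict(model, {"amount": 50.0, "foreign": 0.0}))   # False
print(predict(model, {"amount": 900.0, "foreign": 1.0}))  # True
```

Because inference only reads parameters, it is typically cheap per request and easy to scale out, whereas training is the expensive, periodic step.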

Implementing AI in the Enterprise

Given the complexity of implementing AI systems, a Kubernetes-based infrastructure is a strong choice for achieving the performance, scalability, and efficiency these systems need.

Kubernetes is an open-source container orchestration system that provides organizations with a way to quickly and efficiently deploy, manage, and scale applications. Running AI systems in Kubernetes can offer several advantages.

First, Kubernetes simplifies the deployment of AI workloads, letting teams focus on developing code rather than managing complex infrastructure. Kubernetes also offers autoscaling: for example, the Horizontal Pod Autoscaler adds or removes replicas of a workload based on observed metrics such as CPU utilization, so companies can match resources to demand without provisioning them manually.
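
The Kubernetes Horizontal Pod Autoscaler (HPA) is one concrete form of this autoscaling. Its documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no scaling when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces only that rule plus min/max bounds for a single metric; the real controller also accounts for pod readiness, missing metrics, and scale-down stabilization, so treat this as a simplified model, not the controller's implementation.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified single-metric HPA calculation."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# 3 inference pods averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
# 4 pods averaging 20% CPU against a 60% target -> scale in.
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```

For AI inference services, CPU is often a rough proxy for load; request rate or queue length can be a better scaling signal when exposed as custom metrics.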

Kubernetes also makes AI systems easier to monitor and debug. Resource metrics such as CPU and memory usage are available through the cluster’s Metrics API, and application metrics such as request latency can be collected with common monitoring tools. These insights help organizations identify areas for improvement so they can optimize their models or tweak their algorithms for better results. Finally, Kubernetes supports high availability: it restarts failed containers and reschedules workloads away from failed nodes, so AI services running multiple replicas stay available despite server crashes or other issues.
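
The availability and monitoring features described above are configured per workload. As a sketch, the function below builds a Kubernetes Deployment manifest as a Python dictionary and serializes it to JSON, a format `kubectl apply -f` accepts alongside YAML. It runs several replicas so the service survives the loss of a pod or node, declares CPU and memory requests (CPU-based autoscaling measures utilization against these), and defines a readiness probe so traffic reaches only pods whose model has loaded, plus a liveness probe so unresponsive containers are restarted. The service name, image, port, `/healthz` path, and resource sizes are hypothetical placeholders.

```python
import json


def inference_deployment(
    name: str,
    image: str,
    replicas: int = 3,
    port: int = 8080,
    health_path: str = "/healthz",
) -> dict:
    """Build a Deployment manifest for a hypothetical model-serving container."""
    labels = {"app": name}
    probe = {"httpGet": {"path": health_path, "port": port}, "periodSeconds": 10}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Several replicas keep the service available if a pod or node fails.
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # Requests inform scheduling and CPU-based autoscaling.
                            "resources": {
                                "requests": {"cpu": "500m", "memory": "1Gi"},
                                "limits": {"memory": "2Gi"},
                            },
                            # Route traffic only to pods that report ready.
                            "readinessProbe": {**probe, "initialDelaySeconds": 5},
                            # Restart the container if it stops responding.
                            "livenessProbe": {**probe, "initialDelaySeconds": 30},
                        }
                    ]
                },
            },
        },
    }


manifest = inference_deployment("fraud-model", "registry.example.com/fraud-model:1.0")
print(json.dumps(manifest, indent=2))
```

Redirecting the output to a file such as `deployment.json` and running `kubectl apply -f deployment.json` would create the Deployment; pairing it with a HorizontalPodAutoscaler lets the replica count follow demand.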

Overall, running AI systems in Kubernetes can give companies better scalability, faster deployments, stronger monitoring, and greater reliability, which can ultimately lead to higher customer satisfaction.

Conclusion

In conclusion, tools such as H2O, Apache Spark MLlib, BigML, and scikit-learn can significantly improve the efficiency of AI model building and evaluation. The inference stage is where that work pays off, as trained models apply what they learned from historical data to draw accurate conclusions and support informed decisions. When implementing AI in an enterprise setting, a Kubernetes-based infrastructure helps deliver performance, scalability, and efficiency: Kubernetes simplifies deployment, provides autoscaling, facilitates monitoring and debugging, and supports high availability. Together, these benefits can lead to higher customer satisfaction.

About The Author

Rejith Krishnan

Rejith Krishnan is the co-founder and CEO of CloudControl, a startup that provides SRE-as-a-Service. He’s also a thought leader and Kubernetes evangelist who loves to code in Python. When he’s not working or spending time with his two boys, Rejith enjoys hiking in the New England outdoors, biking, kayaking, and playing tennis.